Why Everybody Is Talking About Learning And Development In Organisations...The Simple Truth Revealed

Free Intelligent Conversation Manifesto

Intelligence is a process of acquiring knowledge, not an achievement.

Knowledge is a resource that is dispersed amongst the members of society and manifests itself in different ways. An intelligent conversation is one where we gain knowledge from the person we are speaking with. Making intelligent conversations a regular part of our lives requires us to continually seek to learn from the people we encounter. The process of seeking to learn from those we encounter will provide us each with the greatest intelligence.

Intelligence is often perceived as unidimensional and we tend to determine how intelligent a person is based on how much they know or achieve by society’s standards. These standards often oversimplify how we perceive intelligence because they fail to notice how knowledge can be gained from personal life experience. By overvaluing these typical measures of intelligence, we undermine the process of gaining knowledge through lived experiences. Intelligence has many forms, and it is a mistake to place significant value in one form while depreciating the others.

With Free Intelligent Conversation we want to learn from everyone we meet and believe that the value of our individual insights is reason enough to seek each other out. We believe that the most socially responsible, noble, and honorable thing to do with the knowledge one has acquired is to share it with other members of society, in an attempt to benefit humanity. We believe that by continually seeking to learn from others we can improve ourselves and the world around us.

Social Freedom of Speech is an understanding that anything can be said and everyone gets the benefit of the doubt.

The exchange and evaluation of ideas is our greatest mechanism for individual and collective development. The freedom to think and express oneself permits opportunities for the truth to surface and prevail in one’s life. Exchanging ideas with others is also therapeutic, as it provides an outlet for thoughts causing internal dissonance. Individuals who exchange ideas are more dynamic; their minds are breeding grounds for new ideas, innovations, and introspection. By repeatedly engaging with other ideas, we refine our own thought process and allow ourselves to fully reach our truest potential.

Despite the benefits of exchanging ideas, we perpetually hinder this process by unwittingly discouraging people from sharing their thoughts. In our day-to-day interactions, people are often apprehensive about speaking freely due to a fear of unfavorable judgement and punishment. A fear rooted in the suspicion that the participants involved are keen on making a rash judgement more than they are willing to listen and understand. Allowing this censorship to carry on is detrimental to our well-being, as we will squander opportunities to learn from one another and improve our lives. The act of engaging in free dialogue with others is so beneficial that it should be regularly encouraged, continuously sought out, and practiced routinely.

With Free Intelligent Conversation we create sacred spaces where no topic is taboo and participants know they can speak freely and without the fear of harsh judgement. A place where people can be vulnerable and unapologetically speak their truths. A playground where ideas can interact, be challenged, and refined. A venue where people seek to reach their full potential and help others do the same through dialogue. A place where, no matter what is said, participants strive to communicate with each other respectfully. A place of conversation, not quarrel.

Free Intelligent Conversation promotes social freedom of speech: an understanding between communicating parties that the environment is one where anything can be said and everyone gets the benefit of the doubt. In creating these places we also hope to create a culture of people who are willing to talk to anyone about anything. A culture willing to talk about the uncomfortable and unfamiliar. A culture welcoming people they disagree with and inviting them to speak freely. A culture that can listen to others’ opinions without needing to convince them of their own. A culture of people who acknowledge their own ignorance and can disagree, without becoming adversaries.

Free Intelligent Conversation actively creates outlets for others to share their most intimate and deeply felt thoughts. We are devoted to defending environments that encourage people to share whatever is on their mind. We want to inspire others to speak freely by encouraging social freedom of speech.

Our differences should be distinctive, not divisive.

The shared perception that our differences are significant is more responsible for our inability to collaborate than the differences themselves. The focus on our differences — whether social, cultural, racial, or economic — is distorted. This distortion has led us to be, at times, overly divisive; sometimes causing us to treat people as stereotypes, not individuals. We often perpetuate this divisiveness out of shyness, uneasiness, and fear of vulnerability. To avoid discomfort, we surround ourselves with like-minded individuals.

While it is convenient and at times necessary for us to congregate with people like us, we must make a regular practice of seeking out those we label as different. Otherwise we’re at risk of developing a myopic understanding and depriving ourselves of potentially transformative experiences. We will also miss out on the joy and growth associated with learning something new. Discomfort is a small price to pay for knowledge, and not paying this fee leaves us exposed to the larger penalty of negative stereotypes, prejudice, and bigotry.

Free Intelligent Conversation believes in paying the price of knowledge. We believe our differences should be distinctive, not divisive. We believe it to be in our individual and collective interest to inform ourselves about each other’s differences. Learning about other perspectives not only helps us understand one another, but also increases self-awareness. The differences between things are best revealed in high contrast. We can never truly know what makes us unique without context and people to benchmark against. We can only begin appreciating and learning from others when we come to see them as individuals. Seeing people as individuals involves two conscious choices: the first is to approach them without prematurely categorizing them. The second is to listen as if you have something to learn from them. In our current sociopolitical climate — given global tension over refugees, immigration, racism, hatred, and harassment — seeing people as individuals has revolutionary implications.

We believe the best way to learn from our differences is to engage in conversation. Conversation is our greatest tool for collaborating in an open-ended way. By having conversations with people who hold different views, we lay the foundation for goodwill and empathy amongst each other. Free Intelligent Conversation creates places where we seek out, learn from, and celebrate each other’s differences through conversation.

Cities are enhanced when the communities within are connected.

Half of humanity — 3.5 billion people — lives in cities today and this number is expected to grow. Cities are centers of activity for ideas, commerce, productivity, science, and social development. Cities provide the best opportunity for social and economic advancement. To capitalize on the advantages that cities offer there must be ongoing opportunities to convene, collaborate, and converse amongst the various communities within. In a city there are plenty of activities, but few public places that actively facilitate fellowship.

Along with the individuals who live in these growing communities, tourists also commonly interact with cities. Aside from work-related purposes, people primarily visit cities to have a semblance of what life in that city may be like. Despite this desire, visitors often leave cities without ever having tapped into their culture. Even the most intentional of visitors are likely to struggle finding individuals from the city who are willing and able to interact. As a result, visitors often find themselves shopping and eating at the same chain franchises they frequent at home — all while leaving much of the city unexplored and failing to experience what it truly has to offer.

With Free Intelligent Conversation we believe that the most profound way for a visitor to experience a city is to spend time in conversation with people from the city, as locals have the best insight on what’s to love about the city. We create public places of active fellowship, where residents and tourists can meet and have meaningful conversations.

We are aligned with the United Nations goal to create Sustainable Cities and Communities — the 11th goal within the 2030 Sustainable Development Goals — which plainly stated is: “To make cities inclusive, safe, resilient and sustainable.”

Free Intelligent Conversation creates opportunities for socio-economic mixing and generates positive contact between people of different social groups, by means of meaningful face-to-face conversations in public spaces. Our approach is an innovative and inexpensive solution to building sustainable, vibrant cities. With Free Intelligent Conversation we believe we will vitalize business, friendship, and community development and create more inclusive, safe, resilient, and sustainable cities.

Meaningful conversations catalyze long-term changes in behavior, attitude, and/or perspective.

Small-talk is best used as a bridge to meaningful conversations and should not comprise the core of a discussion. Though at times necessary, small-talk is mostly unmemorable and leaves participants uninspired. It may even become a burden that causes unnecessary anxiety in undesirable but socially mandated interactions. To combat the hollowness of small-talk we must be intentional about our attitudes when talking to one another, as that is the best way to transform our empty dialogues into meaningful conversations.

Meaningful conversations are conversations that catalyze long-term changes in behavior, attitude, and/or perspective. They are the conversations that call us to be vulnerable and empathetic. They call us to speak our truths and also to hear the truths of others — the truth about their experiences and their ideas. The truth about who they are, and why they are. Meaningful conversations are the most desirable conversations, yet we constantly pass up the opportunities to have them.

With Free Intelligent Conversation we want to catalyze a culture that encourages people to seek out meaningful conversations. We minimize small-talk and trivial pleasantries, and instead prioritize learning about the underlying ideas and stories that compose people’s unique identities. We encourage others to discuss the things that excite, scare, and move them.

In our society, there are few places designated for creating and having meaningful conversations. In an attempt to satiate our desire for meaningful interactions we sometimes resort to trivial social gatherings, awkward parties, and dull evenings at the bar or club, hoping to have an interesting conversation.

With Free Intelligent Conversation we create public spaces for meaningful interactions. We create places where conversations happen for conversations’ sake. A designated space where nothing is being sold, and no one is pushing a religious or political agenda. A public place one can reliably turn to for connecting with people. A place where, by virtue of being present, you indicate that you are interested and willing to talk to anyone about anything. A place where people can be unapologetic about their appetite for talking to, connecting with, and learning from others.

To this end, Free Intelligent Conversation intends to first inspire a culture of people that seeks out meaningful conversations, and then create public places where they can happen.

Face-to-face interaction improves our ability to communicate and connect with people.

For the first time in history, we are immersed in the digital world and detached from the real one. In the age of digital technology, it’s difficult to tell if we interact more through our screens than we do in person. The majority of our conversations — business or personal — take place via text message, email, instant message, and social media platforms. Our advances in technology have made communicating far more convenient, but with a price: with our increased dependency on technology, we decrease opportunities for face-to-face interactions.

Face-to-face interactions are necessary for strong relationships, proper socialization, and the development of great communication skills. As our day-to-day becomes increasingly busy, we must keep in mind that despite the convenience our technology provides, it cannot replace the need for face-to-face communication altogether.

In-person interactions are one of the basic components of our social system. They are a significant part of individual socialization and consequently central to the development of groups and organizations composed of those individuals. In face-to-face interactions we are able to communicate with our whole bodies. Non-verbal cues are just as crucial as the words we say: our facial expression, posture, gesture, tone, and eye contact give powerful suggestions about what we’re trying to communicate — much is expressed through a simple smile or nod.

Face-to-face interactions provide better communication feedback loops: participants are able to give immediate visual feedback, which informs whether or not communicating parties are understanding each other and how participants feel about the discussion. In contrast, the appeal of written communication is that it allows us to craft our message with precision through extensive revisions and edits. Though this medium encourages greater accuracy, it does not allow us to gauge reader reception. Much of our tone is left to the reader’s imagination, which at times leads to miscommunication. Miscommunication is essentially inevitable; however, face-to-face interactions allow us to immediately identify and resolve misunderstandings.

Face-to-face interactions call us to be present by requiring our full attention: we can’t email, text, or be secretly distracted. Face-to-face interactions better allow people to address sensitive issues, which is necessary to build trust, empathy, and strong relationships. Face-to-face interactions are instances of shared real-life experience that can enhance future communication between people. These are some of the aspects that make face-to-face more meaningful than other methods of communication.

As we spread our online relationships wide and thin on social media, the value of each connection is lessened and the benefits we gain from each connection decrease. It is often difficult, if not impossible, on social media to recreate the conditions that define deep, intimate relationships. At best, our social media friends are a supplement to, not a substitute for, real-life interactions with people. Real-life relationships help us learn about others and ourselves. Online friendships, while certainly valuable in many ways, do not satisfy our deepest needs for intimacy derived from proximity. We should seek out our online friends, rekindle lost connections, and revisit childhood friendships, as long as it is not at the expense of real-life relationships.

Our online interactions provide anonymity that is often used to create misleading identities. Written communication allows us to assemble our words until they fit the image we want to project, something that is more difficult to do in a real life conversation. Since we can craft and edit our message, it is much easier to be duplicitous or even self-deluding.

As the coming generations grow up with technology at their fingertips, we must encourage the development of real-life interpersonal skills. We’ve learned to boldly share and defend our thoughts and opinions online, but are apprehensive about doing so in person. We’ve gained social media skills while neglecting our people skills.

While technology has streamlined business communication and social media has provided us with glimpses into other people’s lives, we don’t want to forget the important, intimate parts of human connection that only face-to-face interaction provides. We are often distracted from our lived experiences and belittle them: we attend social functions and prefer to use our cell phones, tablets, and computers rather than engage in conversations. The technology that promised to bring us closer together, by connecting us better than we had ever been before, has instead made some of us reclusive and has put us in ideological echo chambers. This isolation has negative effects on our physical, mental, and emotional health. Having close and frequent connections with friends and community promotes healthy behaviors which encourage a more socially-oriented populace.

With the increase of communication technology, as a society we’ve lost the appreciation for the art of conversation and in-person interactions. As a consequence, we have undermined our best tool for building deep, real-life, meaningful relationships. As our online networks grow, we increasingly feel lonely and disconnected from those around us. At some point we must acknowledge that we can’t have it both ways: we cannot be impersonal and expect to have deep connections. If we want deep connections with our community, we must be willing to work for them.

Free Intelligent Conversation is creating face-to-face conversations as a counter-cultural response to social isolation. We want to be present and remove distractions to have focused, uninterrupted dialogue. We appreciate conversation as an art form, relishing the beauty of communication subtleties that are only felt face-to-face. We want to look people in the eyes while we talk about sensitive issues. We want to read body language and end our interactions with handshakes and hugs. We want to celebrate with high fives, and feed off of the energy of the people around us. We want to witness people laughing out loud, shaking their heads, or rolling on the floor laughing.

We want to improve our ability to communicate and connect with people without hiding behind technology. Free Intelligent Conversation is not a protest against technology or social media; we just don’t want social media to become the crutch that our relationships lean on. Though we use our devices and screens to stay in contact, we organize in public spaces to celebrate life.

We want to be as intentional as we can about engaging with and maintaining relationships with our community and encourage others to do the same. We will provide a place where people — especially across generations — can meet, learn from each other, and build deep relationships. With Free Intelligent Conversation we want to encourage a culture of people who understand the value of face-to-face interactions, seek them out, and encourage their community to engage in them likewise.

Conversation with strangers is an invitation to see the world from another person’s perspective.

The world is a big crowd we often feel alone in. We have friends and family in certain pockets of the world, but most of our interactions involve strangers. We often feel isolated even though we share spaces with and are surrounded by people. This seems unnecessary, considering that strangers are just people we don’t know yet.

The people we know provide a sense of intimacy and connection, but it’s important to remember that strangers can also contribute to our sense of belonging. Even though momentary, a quick hello or a nod of acknowledgement can make us feel less isolated. On some days this is our only sense of human connection — without it, we feel empty. Especially in large cities, talking to strangers reminds us that we all live there together.

The unexpected interruption of a conversation with a stranger disrupts the natural order of our day and calls us to our full attention. As a response, we have a heightened sense of awareness of the world around us. It calls us to be awake, in the moment, and attentive to our surroundings. Surprisingly, it is sometimes easier to share intimate parts of our lives with strangers than it is with people we know. When talking to strangers, we can be vulnerable because we feel that we have nothing to lose. We can openly share our stories, opinions, and secrets without the fear of long-term consequences. In this context, you may find yourself sharing feelings you hadn’t talked about yet, or details about your life that only a few people know. Strangers are likely to reciprocate, which strengthens the connection. Overall, the heightened awareness and the freedom to be vulnerable facilitate intimacy between absolute strangers.

Conversation with strangers is an invitation to see the world from another person’s perspective. When we take the time to connect with strangers, even briefly, it moves us away from fear and towards empathy. People develop a preference for things merely because they are familiar with them. The more familiar we are with a person, the more we’re likely to interact with and have positive experiences with them. Positive experiences with one person from a given social group reduce prejudice toward the entire group to which that person belongs.

Talking with strangers is a life-changing experience, but is difficult to initiate for various reasons. For one, we often don’t have an “excuse” to start conversations. We also don’t know what’s acceptable behavior in this context since we don’t want to be perceived as disruptive or abrasive. These uncertainties, however, are what make conversations with strangers captivating. Many of us would like to have more of these unexpected, intimate moments with strangers — we just don’t think we have a reason for them to happen. For those of us who are more willing to approach strangers, we need some kind of cue that lets us know we are welcome.

With Free Intelligent Conversation we provide an excuse to spark up conversation between strangers. We create places where strangers can meet, talk, and have public opportunities for positive interactions. We encourage strangers not only to talk with us, but also with each other. We believe that by making conversation with strangers more accessible we can positively transform both individuals and the communities, cities, and countries that they live in.

We know that getting strangers to talk to each other is a tall order. We’ve been told that we’re too idealistic. Some people think we want their money. Others think it’s a trick or hoax. Some people are suspicious and want to know “who we work for” or “why we’re really here.” Some find it impossible to conceive that there could be a group of people interested in just talking to others. Some recognize that — in some small way — we want to change the world; and they smile sympathetically at us, feeling sure that the world can’t really be changed like this.

What they don’t know is that we’ve been changed.

We’ve been inspired. We’ve broken out of our comfort zones. We’ve heard stories that have made us laugh, and we’ve had conversations that have brought us to tears. We’ve gotten dates and we’ve been offered jobs. We’ve learned how to be present. We’ve learned about different cultures, and how to appreciate them. We’ve gained insight about ourselves. We’ve learned how to disagree without becoming enemies. We’ve had long chats with our elders. We’ve been given great advice, and have been a listening ear to many. We’ve heard new ideas and we’ve heard dumb ideas. We’ve changed our long-held opinions and we’ve let go of previously held prejudices. We’ve made great friends — all from taking time to talk with strangers.

We want to encourage people to have meaningful, face-to-face conversations and we will create designated places for them to happen. A place where we can learn about the experiences of others, and be vulnerable, honest, and inquisitive. We want to create these places in every city. Our hope is that these designated places will attract and nurture a particular kind of person. The kind of person who tries to learn from everyone they meet. The kind of person that cultivates authentic relationships, and puts away their devices to interact with the real world. The kind of person who looks for ways to engage with their community and broaden their social networks in meaningful ways. The kind of person who can communicate across generational, cultural, and ideological boundaries. We believe this kind of person will change the world for the better.

There are a lot of problems in the world and though we don’t have the answers to them, we believe that the first step towards a solution is to get people to talk to each other. We believe that we can solve these problems one conversation at a time.

If you see us holding a sign that reads “Free Intelligent Conversation,” come talk to us. About what? About anything and everything. The only thing needed for an intelligent conversation is willing individuals. We’re willing.

Are you?

—

Edit 8/7/2017: You can find a read-through of the Manifesto here:

—

13 TCA Takeaways: Goodbye ‘Shameless,’ ‘Saul’ and Hank Azaria’s Apu – Hello ‘LOTR’ Cast, More ‘AHS’

After 13 days, the 2020 Television Critics Association (TCA) Winter Press Tour has come to a close.

Below are 13 of our top takeaways from the never-ending TV media event occupying the Langham Huntington Pasadena ballroom. Thanks for all of the free coffee, but now we need some sleep.

Some Awards Shows Have Hosts, Some Don’t

The Oscars don’t need a host, and the Golden Globes got two.

ABC chief Karey Burke told those in attendance that the 2020 Academy Awards will have “no traditional host” again this year. Hey, it sure worked out last year — check out these TV ratings.

We also got this year’s Emmys date from ABC. Nominations for the Sunday, Sept. 20 show will come out on Tuesday, July 14, ABC said, and “Host(s) and producers for the telecast will be announced at a later date.”

TheWrap asked network reps if that sentence in the press release means there will definitely be a host this year, to which a spokeswoman replied, “Details are not yet firm.”

You know what will definitely not go host-less? The 2021 Golden Globes. NBC announced at TCA that Tina Fey and Amy Poehler will return as hosts for the annual January kickoff to awards season.

Extra Reality

Thanks in large part to its hit reality import “The Masked Singer,” Fox won the fall in Nielsen ratings. How do you keep that momentum going?

For starters, Fox is debuting “Masked Singer” Season 3 immediately following its Super Bowl LIV, which means it will be all of, like, a month and a half between runs. In case that’s not enough “Masked” madness, Fox and Ellen DeGeneres are going into business together on a spinoff: “The Masked Dancer.”

Yes, that one is literally being adapted from an “Ellen” joke.

Not to be outdone, ABC is also spinning off its key unscripted property. Because “The Bachelor,” “The Bachelorette” and “Bachelor in Paradise” can’t consume all of primetime six nights a week, 52 weeks per year, ABC has ordered some kind of confusing new “Bachelor” installment: the music-based “Listen to Your Heart.” (Try to) Learn all about that here.

No Room for Cinemax at HBO Max

Despite the obvious name association, AT&T will not be bringing Cinemax to its upcoming streaming service, HBO Max. Not only that, but HBO Max chief Kevin Reilly said Cinemax will stop producing original content altogether. We don’t know yet what that means for shows like “Jett” and “Warrior.” “Strike Back” debuts its eighth and final season Feb. 14.

Don’t worry, Cinemax will still be a channel. “I think it still serves an important value for its customers in terms of its movie offerings,” Michael Quigley, executive vice president of content acquisitions for HBO Max, said.

And though it wasn’t announced at TCA, we learned during TCA that AT&T’s Audience Network, home to “Mr. Mercedes,” “Condor” and “Loudermilk,” will also no longer be a home for original programming. Instead, AT&T is turning the premium network into a promo channel for HBO Max.

A rep for AT&T told TheWrap that “any future use of Audience Network content will be assessed at a later date.”

No Plans for ‘Modern Family’ Spinoff Yet (But, We Know Who Would Be Up for It)

Despite ABC chief Karey Burke’s hopes for some kind of continuation for the ABC series, which wraps up this spring, co-creator Steve Levitan told us nothing is planned.

“The short answer right now is, there are no plans,” he said.

When TheWrap followed up by asking which cast members would be interested in seeing their characters continue, both Reid Ewing (who plays Dylan) and Aubrey Anderson-Emmons (Lily) raised their hands. They’re two of the younger cast members in the large ensemble — “young” being relative on this family show, as Ewing is 31 years old and Anderson-Emmons is 12 — so at least there’d be a long runway for stories with their respective characters.

Julie Bowen, however, gave us her stipulations for continuing on as Claire Dunphy. “Is the spinoff as good as ‘Modern Family?’ Do we get to have the amazing writers? Do we get to have the amazing cast? The incredible hours? Do I get to work in LA and see my kids? Then yeah.”

Got all that, ABC?

We’re Losing Some Shows…

It just wouldn’t be TCA without hearing news that some of our favorite shows are ending, and the 2020s decade is apparently no different.

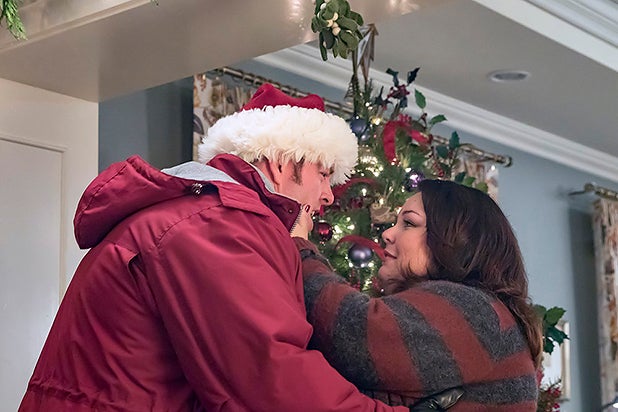

Showtime is finally bringing an end to “Shameless” after 11 seasons, with the final run coming this summer (the network is also eyeing an end date for “Ray Donovan”). Yes, that means that the William H. Macy-led drama will finish its 10th season and air its final run all in the same year. Why?

“We wanted it on earlier, because we wanted to strengthen our summer and we also wanted to provide a great lead-in for ‘On Becoming a God’ in its second season,” Showtime’s entertainment president Gary Levine explained to us. “Homeland” will also wrap up its eight-season run, with the final season starting next month.

Meanwhile, AMC is getting ready to say goodbye to Albuquerque for the second time, announcing that its “Breaking Bad” prequel “Better Call Saul” will wrap up next year with its sixth and final season (it returns for Season 5 in February). That means that next year we’ll finally get that payoff for Gene Takavic, the alias that Saul (er, Jimmy) has been using for his post-“Breaking Bad” exploits.

…But There Are Still Too Many Shows

In his annual survey of scripted programming on TV, FX chief John Landgraf revealed that the number of shows on TV surpassed the 500 mark for the first time in 2019. The coming years will surely only see that number rise, and networks made the most of their time these past two weeks teasing what they’ve got in the pipeline.

Update on NBC’s Investigation Into Gabrielle Union’s ‘America’s Got Talent’ Exit

NBC boss Paul Telegdy told reporters that the network’s ongoing investigation into Gabrielle Union’s contentious departure as a judge on “America’s Got Talent” should wrap up by the end of the month. “We’re very confident if we learn something… we’ll put new practices in place, if necessary, and we certainly take anyone’s critique of what it means to come to work here, incredibly seriously,” the executive said.

Union’s exit was accompanied by multiple news reports describing behind-the-scenes clashes between her and the show’s producers over what was described as a “toxic” workplace culture. Former “AGT” judges Howard Stern and Sharon Osbourne have since spoken out against the “boys’ club” environment on the show, which they said was facilitated by executive producer-turned-judge Simon Cowell.

For her part, former judge Heidi Klum says her experience on the show was nothing short of “amazing.”

“I didn’t experience the same thing,” she said. “To me, everyone treats you with utmost respect.”

Also Read: Pop TV Plots Post-'Schitt's Creek' Future: Comedy With 'Heart' Not 'Snark,' Brad Schwartz Says

Amazon Finally Casts Two of Its Most Anticipated Shows

Amazon’s push into big-budget blockbuster programming has been in the works for years, but the streamer finally shared some casting news for both its highly anticipated “Lord of the Rings” TV series and its international spy franchise from “Avengers” directors Joe and Anthony Russo.

“Quantico” star Priyanka Chopra and “Game of Thrones” alum Richard Madden have signed on to star on “Citadel,” the U.S. installment of the Russo Brothers’ franchise, which will be accompanied by three other interconnected local-language series based in Italy, India and Mexico.

The streamer’s “LOTR” series, meanwhile, cast a whopping 13 series regulars: Owain Arthur, Nazanin Boniadi, Tom Budge, Ismael Cruz Córdova, Ema Horvath, Markella Kavenagh, Joseph Mawle, Tyroe Muhafidin, Sophia Nomvete, Megan Richards, Dylan Smith, Charlie Vickers and Daniel Weyman. They join previously reported stars Robert Aramayo and Morfydd Clark in the eight-episode series.

Also Read: 'The Biggest Loser': Things Get Heavy During Contentious TCA Panel for USA Reboot

Multiplicity Is In

Multiple networks picked up multiple shows for multiple seasons during this winter’s press tour.

Ryan Murphy’s “American Horror Story” is coming up on Season 10 — which TheWrap learned exclusively will feature the return of “AHS” staple Sarah Paulson — and has now been renewed for three more, meaning the anthology is guaranteed to run at least 13 seasons. (Isn’t that just poetic?)

Adding to the additional-seasons trend are NBC, which has ordered three more years of its Ryan Eggold-led medical drama “New Amsterdam,” TBS, which has picked up Seth MacFarlane’s “American Dad” for two more seasons, and Comedy Central, which has renewed “Tosh.0” for four more installments as part of the channel’s new first-look deal with creator Daniel Tosh.

Also Read: Former 'AGT' Judge Heidi Klum Weighs in on Flap Over Gabrielle Union Ouster

Why HBO Picked Dragons Over Naomi Watts

HBO finally ordered one of its several potential “Game of Thrones” spinoffs to series last October — only it wasn’t the one everyone was expecting would get picked up. The pay TV channel scrapped its Naomi Watts-led “GoT” prequel referred to internally as “Bloodmoon” after shooting the pilot and opted to give a straight-to-series order to George R.R. Martin and “Colony” co-creator Ryan Condal’s “House of the Dragon,” which is a show about the Targaryens’ history.

When TheWrap sat down with HBO programming chief Casey Bloys, we asked why — and the answer wasn’t so simple.

“In general for a pilot, and this is very much the case in this one, there’s not one thing that I would say, ‘Oh, this went terribly wrong,'” Bloys told us. “Sometimes a pilot comes together, sometimes it doesn’t. Sometimes even the best aspects don’t totally gel, sometimes they do. That’s kind of the little bit of luck and magic in doing shows — and sometimes they come together and sometimes they don’t.”

“Bloodmoon” was co-created by Martin and “Kingsman” screenwriter Jane Goldman and set thousands of years before the events of the original “Game of Thrones” series. “House of the Dragon,” on the other hand, is based on Martin’s “Fire & Blood” book, which details the Targaryen family lineage, and takes place just 300 years before the events of “GoT.”

Bloys added: “One of the advantages of ‘House of the Dragon’ is you’ve got history and text from George in terms of the history of the Targaryens. So you had a little bit more of a roadmap. So that made it easier to say go straight to series on that. Also, in general with ‘Game of Thrones,’ one of the things going into it we knew — that we know from the development in general — is very few things you get right the first time. And so that’s why we did multiple scripts. And we would have been very fortunate had the one pilot worked and gone straight to series and that would have been that. But you also had to make plans for if that didn’t happen. So we wanted to have a lot of options, so that’s why we went in very deliberately trying to go at it a number of different ways.”

Also Read: 50 Cent Says He Advised Eminem to Not Respond to Nick Cannon: 'You Can't Argue With a Fool'

Seriously, Jussie Smollett Is Not Coming Back to ‘Empire’

Fox would love for us all to stop bringing this up, but since “Empire” showrunner Brett Mahoney hasn’t shut the door on the idea, we had to ask again: Will Jussie Smollett reprise his role as Jamal for this spring’s series finale of the hip-hop drama?

“He will not be coming back,” Fox entertainment boss Michael Thorn told TheWrap. “As you would expect when you’re finishing an iconic series like ‘Empire,’ that Brett, as the showrunner, along with his producing partners, would certainly have discussions about what’s the best way to finish the show. In this case, Jussie will not be coming back for the finale.”

Smollett left the show toward the end of last season, shortly after Chicago law enforcement accused him of staging a high-profile hate crime against himself. The producers, responding to intense public pressure, wrote Smollett out of the final episodes of Season 5 despite protests from Smollett’s supporters on the cast.

“Our hope at Fox — and I know the producers feel the same way — is that the show, to us, is much bigger than some of the personal stuff that’s unfortunately happened for Jussie, where we just want the ending to be as epic as the beginning,” Thorn said.

Hank Azaria Is Officially Done With Apu

The “Brockmire” star confirmed once and for all that he will no longer be the voice of the Indian American convenience-store proprietor Apu Nahasapeemapetilon on Fox’s “The Simpsons.”

“I won’t be doing the voice anymore, that’s all we know. Unless there’s some way to transition it or something,” Hank Azaria told reporters after the panel for his IFC series, which is ending with its upcoming fourth season.

“What they’re going to do with the character is their call, it’s up to them, they haven’t sorted that out yet,” Azaria said of “The Simpsons” team. “All we agreed on is that I won’t do the voice anymore. We all had made the decision together, we all feel it was the right thing and good about it.”

Also Read: 'Morning Show' Producer Says 'No Update' on Steve Carell Returning for Season 2, But They're 'Exploring' It

Apple TV+ Joins the Party

Apple TV+ had its first-ever appearance at TCA during this winter’s tour, landing the final day slot on Sunday, which isn’t exactly a coveted one, due to the fact you’re presenting to a room full of really tired journalists.

(We have to note here that before the new streaming service kicked things off in Pasadena, its direct competitor Disney+ started the morning with an interestingly timed investment in Twitter promotion. Now back to Apple, the belle of Sunday’s ball.)

The tech giant opened its presentations with a buff-looking Kumail Nanjiani and his wife and co-writer Emily V. Gordon, who appeared via satellite to discuss their series “Little America.” They and the other executive producers (Alan Yang, Lee Eisenberg, Sian Heder, Joshua Bearman) talked about how a show that depicts immigrants in an empathic light is inherently political, despite their efforts to focus on the characters’ personal stories rather than the American immigration system.

Hilde Lysiak, the 13-year-old journalist who went viral with her exclusive report of a hometown murder in 2016, was on hand to discuss “Home Before Dark,” the drama series based on her investigative reporting in which Brooklynn Prince plays her and Jim Sturgess plays her father.

Also Read: Rob McElhenney Says the Characters in 'Mythic Quest' Are 'Real People' Compared to 'Sunny' Gang

Diversity was a big topic throughout Apple’s day, particularly during the panels for upcoming docuseries “Visible: Out on Television” and the Kristen Bell and Josh Gad-voiced cartoon “Central Park.”

When “It’s Always Sunny in Philadelphia” creator Rob McElhenney and star David Hornsby showed up to talk about their gamer comedy “Mythic Quest: Raven’s Banquet” they were obviously immediately asked about “Sunny” — specifically, the similarities and differences between the two shows.

“The Morning Show” executive producer Michael Ellenberg said he has “no update” on the possibility of Steve Carell returning to the series for Season 2, but that they are “exploring” it — and everyone else remained tight-lipped about the next season, including Jennifer Aniston, Reese Witherspoon, Billy Crudup and director Mimi Leder.

Apple wrapped up its day with more eye-candy via satellite in the form of Chris Evans, who was on hand to promote his upcoming series “Defending Jacob.”

Four seasons into "This Is Us" and the only thing fans can rely on more than the fact that they're sure to get at least one twist or turn when they tune in each week is that they are definitely going to shed at least one -- and usually more -- tear per episode. Ahead of the NBC family drama's return from winter hiatus on Jan. 14, TheWrap has rounded up the show's biggest tearjerker moments -- both good and bad -- so far. Obviously, spoilers ahead.

Also Read: Winter TV 2020: Premiere Dates for New and Returning Shows (Photos)

NBC

How Randall became the third triplet

Even before the big time-jump twist was revealed on the pilot of "This Is Us," the tears were already flowing when it was revealed that one of Jack and Rebecca's triplets didn't make it through childbirth. Struck by a moment of inspiration in grieving, the Pearsons decide to adopt a baby who had been abandoned and showed up at the hospital on the same day.

Also Read: ‘This Is Us’ Series Premiere Recap: The Big Twist Revealed

NBC

Jack's fate

First, the show dropped the bombshell that Jack has been gone for so long that Randall's kids consider someone else entirely their "grandpa." We shouldn't have been surprised to learn, then, that the Pearson family patriarch is actually dead, and had been since about 2006. Kate seems to have a hard time moving on from it, still maintaining a tradition of watching every Steelers game with her dad -- even if it's just his ashes left now.

Also Read: ‘This Is Us’ Recap: Jack’s Fate Revealed as Family Secrets Spill

NBC

The truth about William

We knew William was dying pretty early on, but that didn't make him trustworthy. It took Beth gently chiding Randall about his inherent goodness and William's shady behavior to get the truth out on the table: William isn't still doing drugs, he's been disappearing for hours on end each day in order to take the bus to his house to feed his cat. "Well now I feel like a bitch," Beth quipped, and we wept.

Also Read: ‘This Is Us’ Recap: Randall Gets His Name, and an Origin Story

NBC

Toby's past

The heartbreaking moments don't belong exclusively to the Pearsons. When Kate pushes, Toby reveals exactly why he's not with his gorgeous ex-wife Josie anymore: Turns out, she was so horrible to him that he became suicidal and gained 100 pounds in a year. Ouch.

Also Read: ‘This Is Us’ Recap: ‘The Big Three’ Introduces a New Mystery

NBC

Kate the princess

After being ostracized by her friends in the cruelest way possible, little Kate's spirit was brought back to life thanks to a bit of magical storytelling by Jack, who hands her his t-shirt and tells her she can be anything she wants when she wears it, though she's always a princess in his eyes. Who's chopping onions around here?

Also Read: ‘This Is Us’ Recap: ‘The Big Three’ Introduces a New Mystery

NBC

Rebecca and Shakespeare

Finding herself unable to bond with her adopted son after the death of one of her triplets, Rebecca seeks out the baby's birth father instead of telling her husband. Their heart-to-heart, where she refuses his request for visitation and he gives her the inspiration to name the baby Randall, is one of the show's most gut-wrenching so far.

Also Read: ‘This is Us’ Review: Dan Fogelman Mines Great Melodrama From Everyday Life

NBC

Randall just wants to fit in

When the Pearsons discover little Randall is gifted, it seems like a great thing, until Jack gets it out of him that he's been pretending to be not as smart as he is in order to fit in with his siblings.

NBC

Randall's trip down memory lane

Only on this show could a bad hallucinogenic mushroom take the characters and the audience on a gut-wrenching emotional journey. As Randall reels from his mother's betrayal, a vision of his dead father takes him down memory lane to reveal just how hard and lonely it must have been for his mother to keep such a secret to herself.

Also Read: ‘This Is Us': Jack Pearson Is Old, Grey and Not Dead in Season 2 Finale Promo (Video)

NBC

Kate and the rage-drums

During a seemingly hokey fat camp exercise, Kate taps into some very real emotions, mostly about her father's death, and lets out a primal scream so heartbreaking we still can't get it out of our minds and ears.

NBC

Trouble in paradise

After an episode where their happy marriage was contrasted to Miguel and Shelly's crumbling relationship, Jack and Rebecca were left off on an ominous note when she revealed she wants to go on tour with her band -- led by ex-boyfriend Ben.

NBC

Randall's breakdown

The stress of work and William's impending death finally takes its toll on Randall, who has a full-blown breakdown. The scene is made all the more emotional when the ever-self-absorbed Kevin picks up on the strain in his brother's voice and races to his side to cradle him in his arms as he cries.

NBC

All of "Memphis"

William finally passes at the end of this heartbreaking episode, which centers around his road trip home to Memphis with Randall. The hour is spent going between flashbacks of his youth and the road he traveled to leave his mother and music career behind and get mixed up with drugs. Randall is with his biological father when he passes and the drive back home is both the most cathartic and heart-wrenching scene we've ever watched.

Jack and Rebecca's separation

Though the break was ultimately a very short one, as Rebecca soon came to pick Jack up from Miguel's place, the end of Season 1 left us on a cliffhanger as we wondered if and when Rebecca and Jack would reunite after a huge fight. Trying to keep from bawling for the next few months as we awaited the answer at the beginning of Season 2 was our biggest problem over the summer hiatus.

NBC

Kate's miscarriage

This episode packed more of a punch than anyone was ready for, even though we found out at the end of the previous episode what was to come. Watching Kate lose her and Toby's baby in "Number Two" and the different ways they chose to grieve was truly a once in a lifetime TV experience. But it was definitely something we only wanna witness once.

NBC

Jack's father's death

To say that Jack and his father had a tumultuous relationship would be an understatement. But when we saw him on his death bed we forgot all about their horrible history and watched Kate and Rebecca say goodbye to a man they never knew because Jack wasn't there.

NBC

Kevin and Sophie's break-up

Seeing as we have yet to witness how these two lovebirds originally split, it was really hard to watch Kevin dump Sophie due to his new addiction. The two were high school sweethearts who divorced at a young age after Kevin cheated. We don’t know exactly what went wrong, but after this upsetting scene, we’re not sure fans can handle seeing the first go-round.

NBC

Kevin's addiction

Kevin developed an addiction to painkillers and alcohol following an on-set injury at the beginning of Season 2. After watching “Number One” and learning how his already bad knee was ruined during a football game — thus ending his dream career — the tears started flowing and haven’t let up since.

NBC

Drunk driving with a stowaway

Remember the time that Kevin was upset and drove off from his brother Randall’s house drunk? Yep, that was a dumb move. But what made it even worse was the little stowaway in the backseat. Yes, the midseason finale of Season 2 saw Kevin behind the wheel of a car while under the influence with Tess right there behind him. She snuck away when Randall and Beth were saying goodbye to Deja, a teenager they were fostering who was taken back by her biological mother. While we knew before the end of the episode everyone was safe (thank goodness), Kevin was arrested on a DWI -- in front of his niece. And of course Randall and Beth were waiting at home to kill him. Now these were probably some rage tears.

NBC

Rehab scene/The Big Three "bench moment"

“The Fifth Wheel” delivers one of the most tense moments of the series, when Rebecca and the Big Three enter a family therapy session while Kevin is in rehab. Kevin shares that he felt like a fifth wheel growing up because Kate had Jack and Randall had Rebecca (this is a scenario anyone who comes from a three-sibling family can probably relate to). Rebecca ends up admitting that yes, she was closer with Randall because he was “easier” to parent. It’s a tough blow, and everyone leaves the session pretty pissed at each other. But the siblings convene later on in the episode to look back objectively on their childhood and all is well, leaving us touched and sad all at once.

NBC

The Sidekicks/"Star Wars" speech

Meanwhile, Toby, Beth and Miguel decide to get some drinks, as they are not invited to family therapy. They bond over feeling excluded from the crazy-tight bond the remaining Pearsons have with one another, and compare themselves to the side characters in “Star Wars.” But when Beth and Toby start to talk about how they were never able to meet Jack, and feel like his kids put him on a pedestal, Miguel sweeps in in Jack’s defense and shuts that conversation down -- reminding us, again, of the giant hole Jack left after his death.

NBC

Kate's dog issues

Kate and that dog. Have you ever seen someone more conflicted about something so adorable? We first learned of Kate’s serious issues with canines when she just couldn’t bring herself to adopt the cutest furry friend for her fiancé Toby, who desperately wants a dog of his own. She got so close to picking him up, but then bailed, prompting a heavy flood of tears from viewers. However, she ultimately decided making Toby happy was more important than her issues and the tears came even harder when she revealed the dog, Audio, to his new owner.

Also Read: ‘This Is Us': Crock-Pot Tells ‘Heartbroken’ Fans Product Is Safe After Terrifying Episode

NBC

That damn slow cooker

We'd like to start out by saying sorry to both the fans and Crock-Pot for the amount of anguish this cost them. At the end of the episode "That'll Be the Day" -- which recounts Jack's last day alive -- we find out that a very old slow cooker with a faulty switch was what ignited the fire that burned down the Pearson family home. Yes, Crock-Pot took the heat for that one to the point where Milo Ventimiglia and the show graciously stepped in to do a promo for the product to prove it was totally Jack Pearson-approved.

Also Read: Milo Ventimiglia Explains How (and Why) ‘This Is Us’ Did That Last-Minute Crock-Pot Promo

NBC

Jack’s cause of death/Rebecca keeping it together for the kids

In the big post-Super Bowl episode (aptly named “Super Bowl Sunday”), we finally learn the true cause of Jack’s death. Jack and the rest of the family make it out of the house fire safely, and Jack even had time to go back in to save Kate’s dog and a few other family keepsakes. But that’s just what turned out to be his downfall: he inhaled too much smoke, causing him to have a heart attack at the hospital and die unexpectedly. Mandy Moore delivers the most heartbreaking scene at the end of the episode when Jack dies: not only do we see her break down completely in the hospital, but she’s able to pull it together to tell the kids. The whole thing left us crying into our leftover guacamole from the Super Bowl.

Also Read: ‘This Is Us’ Hits 27 Million Viewers Right in the Feels With Post-Super Bowl Tearjerker

NBC

Literally "The Car" -- just, yeah, "The Car"

This whole episode was even harder to watch than “Super Bowl Sunday.” We’ve been ugly crying at every mention of Jack’s death since Season 1, but seeing Dr. K console Rebecca not only emphasized the fact that Jack was dead but also reminded us that Dr. K was finally able to find love again after his own wife died. When Rebecca took the kids to Jack’s tree, and they all agreed to go to the Bruce Springsteen concert in his honor, we were a puddle on the floor.

Also Read: Milo Ventimiglia, Crock-Pot ‘Overcome’ Their Differences in New Super Bowl Ad (Video)

NBC

Kate, Randall, Lean Pockets and “Sex & the City”

Leave it to "This Is Us" to make an episode about bachelor and bachelorette parties about sibling love. In "Vegas, Baby" we see why Kate has never really become close with her sister-in-law Beth. Turns out, Kate and Randall were very close as teens -- Lean Pocket and "Sex & the City" viewing parties, of course -- and when he met Beth, she knew she'd "lose him." Of course Randall reassures his sister their bond just can't be broken. Also, he wasn't really a "Sex & the City" fan, he just watched it to spend time with Kate. Aww.

NBC

That Deja episode

What is it with falling in love with the grandparents on this show? In “This Big, Amazing, Beautiful Life,” we get to learn a lot more about Deja’s backstory and tough upbringing--and meet her amazing grandma Joyce, who mostly takes care of her because her mom, Shauna, was only 16 years old when she had Deja. When Joyce dies, it marks the point where things really start to go downhill for Deja and Shauna, and they end up sleeping in their car until Beth and Randall find them. When Shauna realizes Deja can have a better life living with the Pearsons and leaves in the middle of the night, it broke us all.

Also Read: ‘This Is Us': Jack Pearson Is Old, Grey and Not Dead in Season 2 Finale Promo (Video)

NBC

When Deja vandalizes Randall's car

At Toby and Kate's wedding, Toby's mom mistakes Deja for Randall's biological daughter, and let's just say the mistake does not make her happy. Deja's already upset, given that her mom's parental rights have been revoked, and she takes out her emotions by taking a baseball bat to her foster dad's fancy car -- which hits us right in the heartstrings.

Also Read: Watch ‘This Is Us’ Cast Read for Their Now-Iconic Roles in Old Audition Tapes (Video)

NBC

Jack talking to little Kate

As Kate walks down the aisle, we hear a voiceover of Jack talking to a younger "Katie-girl."

"One day, a long time from now, you're going to meet someone who's better than me," Jack tells her after she asks if she can marry him someday. "He's gonna be stronger, and handsomer, maybe better at board games than me. And when you find him, when you find that guy, that's the guy you're going to marry." But no one could be as strong and handsome as you, Jack!! Needless to say, this scene juxtaposed with Kate walking down the aisle had us in a puddle of tears.

Also Read: ‘This Is Us’ Season 3 Sneak Peek: See How Jack and Rebecca Spent Their Very First Date (Video)

NBC

"It's time to go see her, Tess"

Hold. On. We've added a third timeline to this story? Of course we have. At the very end of the Season 2 finale, we get a quick glimpse of future Tess, and an older Randall says ominously, "It's time to go see her, Tess."

Tess responds that she's not ready, and Randall says he's not either -- but we are ready to find out who the mystery "her" is. The scene is cut in a way that makes us think Beth might be dying (but that was ruled out by Susan Kelechi Watson herself) or something with Deja, but we know enough by now to expect a twist. Just seeing older Tess and Randall together is enough to make us teary.

Also Read: ‘This Is Us': 16 New Season 3 Premiere Photos to Make You Giddy for Pearson Return

NBC

Kate and Toby are rejected by an IVF doctor

In the first episode of Season 3, Kate and Toby find out they are insufficient candidates for in vitro fertilization -- on her birthday.

NBC

Jack and Rebecca's $9 first date

The night is a disaster due to Jack's low budget, which he doesn't want to tell Rebecca about until the very end. But when he does, all is forgiven.

NBC

When Future Randall called Future Toby about "her"

Cause we all thought it was Kate and OMG.

NBC

Kate gets pregnant again -- with "one shot" from IVF

That positive test brought on the waterworks.

NBC

Kevin finds out where Jack's necklace came from

The necklace Kevin received from his dad when he broke his leg originally belonged to a Vietnamese woman who Jack helped when he was in the war. So sweet.

NBC

Jack and Rebecca's road trip to L.A.

Oh, so many romantic moments. So many tears.

NBC

Tess comes out to Kate -- and later to Beth and Randall

In a very special episode on a show full of very special episodes, Tess gets her first period and confesses to her aunt that she has feelings for girls. Not long after, she comes out to her parents and it's impossible to not weep over how beautiful the moment is.

NBC

When "Her" is finally revealed to be Rebecca

Don't pretend like hearing Future Beth say everyone was going to see "Randall's mother" didn't kill you.

NBC

When we found out Nicky was alive

And everything changed.

NBC

The toll Randall's campaign for city council takes on his family

We really did get worried about Randall and Beth there for a few episodes.

NBC

When Nicky finds out Jack is dead

In his first-ever meeting with his adult niece and nephews. His subsequent first-ever meeting with Rebecca packs even more of a punch, for both Nicky and the audience.

NBC

Beth's backstory episode

The whole thing. All of it. Her tireless effort at a teen dancing career, her dad's death, the moment she bumps into Randall at college, present-day Beth going to a dance studio. Everything.

NBC

The whole family waits to see if Kate and her premature baby are going to be OK

The things that come out in "The Waiting Room" episode. And then Kate and Toby name their baby Jack. Wow.

NBC

Randall and Beth's love story, juxtaposed with their current relationship issues

"R&B" forever and ever and ever.

NBC

Kevin and Zoe breakup

Because he wants kids and Zoe knows it, so she ends it and it's really rough to watch.

NBC

The first time we see Future Rebecca

And obviously begin to worry more about her clearly failing health.

NBC

The reveal that Baby Jack is blind -- and an incredibly talented singer in the future

The one-two punch of the Season 4 premiere's big reveal rivaled that of the emotional rollercoaster that the series premiere took us on. That song? Tears.

NBC

Deja and Malik's adorable young love

Their adorable ditch day in Philly was one of the sweetest teen love stories we've ever seen. Of course the real tears came when their parents tried to put the brakes on the whole thing.

NBC

Rebecca's dad making it clear he doesn't think Jack is good enough for his daughter

We know that they make it through fine -- obviously -- but this backstory is still rough to watch.

NBC

Nicky, Kevin and Cassidy getting sober together

Multiple moments from this arc have had us sobbing, as these three keep banding together to help each other through some really rough battles to stay clean.

Jack getting jealous over Randall's relationship with his only Black teacher

Jack realizes he can't be everything for his son, because he isn't exactly like his son. But when he accepts this, he learns how to let Randall connect with others who can give him what he needs.

NBC

Rebecca admitting to Randall her memory is getting so bad she needs help

After an entire Thanksgiving episode in which she gets lost -- and we find out that is actually a flashforward to a point when the problem has gotten much more serious.

NBC

Slow cookers, road trips, miscarriages, house fires — where does it end?!?

Actually, it’s about Ethics, AI, and Journalism: Reporting on and with Computation and Data

Photo | Scanned from the 1964 handout for the IBM Pavilion at the World's Fair. Scan from Jeff Roth, The New York Times.

We live in a data society. Journalists are becoming data analysts and data curators, and computation is an essential tool for reporting. Data and computation reshape the way a reporter sees the world and composes a story. They also control the operation of the information ecosystem she sends her journalism into, influencing where it finds audiences and generates discussion.

So every reporting beat is now a data beat, and computation is an essential tool for investigation. But digitization is affected by inequities, leaving gaps that often reflect the very disparities reporters seek to illustrate. Computation is creating new systems of power and inequality in the world. We rely on journalists, the “explainers of last resort”[1], to hold these new constellations of power to account.

We report on computation, not just with computation.

While a term with considerable history and mystery, artificial intelligence (AI) represents the most recent bundling of data and computation to optimize business decisions, automate tasks, and, from the point of view of a reporter, learn about the world. (For our purposes, AI and “machine learning” will be used interchangeably when referring to computational approaches to these activities.) The relationship between a journalist and AI is not unlike the process of developing sources or cultivating fixers. As with human sources, artificial intelligences may be knowledgeable, but they are not free of subjectivity in their design — they also need to be contextualized and qualified.

Ethical questions of introducing AI in journalism abound. But since AI has once again captured the public imagination, it is hard to have a clear-eyed discussion about the issues involved with journalism’s call to both report on and with these new computational tools. And so our article will alternate a discussion of issues facing the profession today with a “slant narrative” — indicated because these sections are in italics.

The slant narrative starts with the 1964 World’s Fair and a partnership between IBM and The New York Times, winds through commentary by Joseph Weizenbaum, a famed figure in AI research in the 1960s, and ends in 1983 with the shuttering of one of the most ambitious information delivery systems of the time. The simplicity of the role of computation in the slant narrative will help us better understand our contemporary situation with AI. But we begin our article with context for the use of data and computation in journalism — a short, and certainly incomplete, history before we settle into the rhythm of alternating narratives.

Data, Computation, and Journalism

Reporters depend on data, and through computation they make sense of that data. This reliance is not new. Joseph Pulitzer listed a series of topics that should be taught to aspiring journalists in his 1904 article “The College of Journalism.” He included statistics in addition to history, law, and economics. “You want statistics to tell you the truth,” he wrote. “You can find truth there if you know how to get at it, and romance, human interest, humor and fascinating revelations as well. The journalist must know how to find all these things—truth, of course, first.”[2] By 1912, faculty who taught literature, law, and statistics at Columbia University were training students in the liberal arts and social sciences at the Journalism School, envisioning a polymath reporter who would be better equipped than a newsroom apprentice to investigate courts, corporations, and the then-developing bureaucratic institutions of the 20th century.[3]

With these innovations, by 1920, journalist and public intellectual Walter Lippmann dreamt of a world where news was less politicized punditry and more expert opinion based on structured data. In his book Liberty and the News, he envisioned “political observatories” in which policy experts and statisticians would conduct quantitative and qualitative social scientific research, and inform lawmakers as well as news editors.[4] The desire to find and present facts, and only facts, has been in journalism’s DNA since its era of professionalization in the early 20th century, a time when the press faced competition from radio, a new medium that, like photography and cinema, was far more visceral.[5] It was also a time when a sudden wave of consolidations and monopolization rocked the press, prompting print journalists to position themselves as a more accurate, professional, and hopefully indispensable labor force. Pulitzer and William Randolph Hearst endowed journalism schools, news editor associations were formed and codes of ethics published, regular reporting beats emerged, professional practices such as the interview were formed, and “objectivity” became the term du jour in editorials and the journalistic trade press.[6]

“Cold and impersonal” statistics could “tyrannize over reason,”[7] Lippmann knew, but he had also seen data interpreted simplistically and stripped of context. He advocated for journalists to think of themselves as social scientists, but he cautioned against wrapping unsubstantiated claims into objective-looking statistics and data visualizations. However, it took decades for journalism to ease into data collection and analysis. Most famously, the journalist and University of North Carolina professor Philip Meyer promoted the new style in journalistic objectivity, known from the title of his practical handbook as “precision journalism.”[8]

Precision journalism gained popularity as journalists took advantage of the Freedom of Information Act (FOIA), enacted in 1966 and in effect from 1967, and used it and other resources to make up for some chronic deficiencies of shoe-leather reporting. Meyer recounts reporting with Louise Blanchard[9] in the late 1950s on fire and hurricane insurance contracts awarded by Miami’s public schools. Nobody involved in the awards would talk about the perceived irregularities in the way no-bid contracts were granted, so Meyer and Blanchard analyzed campaign contributions to school board members and compared them to insurance companies receiving contracts. The process required tabulation and sorting, two key components at which mainframe computers would excel a decade later.[10] Observing patterns, as opposed to single events or soundbites from interviews, introduced one of the core principles of computer-assisted reporting (CAR): analyzing the quantitative relationship between occurrences, as opposed to only considering those occurrences individually.
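
The tabulate-and-compare step described here can be sketched in a few lines of Python. This is only an illustration: the company names, amounts, and record layout are invented for the example and are not drawn from Meyer and Blanchard's reporting.

```python
from collections import defaultdict

# Hypothetical records, invented for illustration only.
# Each contribution: (company, board member, amount given).
contributions = [
    ("Acme Insurance", "Board Member A", 500),
    ("Acme Insurance", "Board Member B", 250),
    ("Beacon Mutual", "Board Member A", 100),
    ("Coastal Casualty", "Board Member C", 0),
]
# Each contract: (company, contract value).
contracts = [
    ("Acme Insurance", 40000),
    ("Beacon Mutual", 5000),
    ("Delta Underwriters", 12000),
]

def tabulate(pairs):
    """Sum amounts per company (the tabulation step)."""
    totals = defaultdict(int)
    for company, amount in pairs:
        totals[company] += amount
    return dict(totals)

def compare(contributions, contracts):
    """Line up total contributions against total contract value per company,
    sorted by contributions, largest first (the sorting step)."""
    given = tabulate((company, amount) for company, _, amount in contributions)
    awarded = tabulate(contracts)
    companies = set(given) | set(awarded)
    rows = [(c, given.get(c, 0), awarded.get(c, 0)) for c in companies]
    return sorted(rows, key=lambda row: (-row[1], row[0]))

table = compare(contributions, contracts)
for company, gave, got in table:
    print(f"{company:20s} gave {gave:5d}  received {got:6d}")
```

Reading down the sorted table, rather than at any single row, is what surfaces a pattern between giving and receiving.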

As computation improved, most reporters began to use spreadsheets and relational databases to collect and analyze data. This new agency augments investigative work: individual journalists can interpret, collect, and receive data to characterize situations that might otherwise be invisible. Meyer’s early stories helped make computation feel like the logical, and increasingly necessary, work of a reporter. But this is only a beginning for the advances in reporting and news production that computation represents for the profession. There is more ahead for computational journalism—that is, journalism using machine learning and AI—and we will explore the complexities in the remainder of this article.

This historical presentation has been brief and incomplete, but it is meant to provide a context for the use of data and computation in journalism. We are now going to retreat to a point in the middle of this history, the early 1960s, and begin our narrative with the 1964 World’s Fair.

1964—An elaboration of human-scale acts

For the IBM Pavilion at the 1964 World’s Fair in New York, Charles Eames and Eero Saarinen designed an environment that was meant to demystify the workings of a computer, cutting through the complexity and making its operations seem comprehensible, even natural. The central idea was that computer technologies—data, code, and algorithms—are ultimately human.

“… [W]e set forth to create an environment in which we could show that the methods used by computers in the solution of even the most complicated problems are merely elaborations of simple, human-scale techniques, which we all use daily.

“… [T]he central idea of the computer as an elaboration of human-scale acts will be communicated with exciting directness and vividness.”

Designer’s Statement by Charles Eames and Eero Saarinen, 1964

At the heart of their pavilion was The Information Machine, a 90-foot high ovoid theater composed of 22 staggered screens that filled a visitor’s field of view. The audience took their seats along a steep set of tiers that were hoisted into the dome theater. The movie, or movies, that played emphasized that the usefulness of computation goes beyond any specific solution. The film is really about human learning as a goal—about how we gain insight from the process leading to and following a computation. The next quote comes from an IBM brochure describing The Information Machine—it is the film’s final narration followed by the stage direction for its close.

Narrator: “…[T]he specific answers that we get are not the only rewards or even the greatest. It is in preparing the problem for solution, in these necessary steps of simplification, that we often gain the richest rewards. It is in this process that we are apt to get an insight into the true nature of the problem. Such insight is of great and lasting value to us as individuals and to us as society.”

With a burst of music, the pictures on the screens fade away, your host comes back to say goodbye. Below you, the great doors swing open… The show is over.

IBM Description of “The Information Machine”, 1964

In subsequent reviews of the pavilion, the design of The Information Machine was said by some critics to walk a fine line, attempting to make the computer seem natural on the one hand, but conveying its message through pure spectacle on the other—with the dome theater, the coordinated screens, and the large, hydraulic seating structure. The end effect is not demystification but “surprise, awe and marvel.”[11] In contemporary presentations of computation, and in particular AI and machine learning, we often lose the human aspects and instead see these methods just with “surprise, awe and marvel” — a turn that can rob us of our agency to ask questions about how these systems really operate and their impacts.

Contemporary Reporting and Its Relation to AI

Creating data, filling the gaps

The commentary in Eames’ film reminds us that computational systems are human to the core. This is a fact that we often forget, whether or not it is expressed in an advertising campaign for IBM. Reporting depends on a kind of curiosity about the world, a questioning spirit that asks why things work the way they do. In this section, we describe how journalists employ AI and machine learning to elaborate their own human-scale reporting. How does AI extend the reach of a reporter? What blind spots or inequalities might the partnership between people and computers introduce? And how do we assess the veracity of estimations and predictions from AI, and judge them suitable for journalistic use?

The job of the data or computational journalist still involves, to a large extent, creation of data about situations that would otherwise be undocumented. Journalists spend countless hours assembling datasets: scraping texts and tables from websites, digitizing paper documents obtained through FOIA requests, or merging existing tables of data that have common classifiers. While we focus on the modeling and exploration of data in this section, some of the best reporting starts by filling gaps with data sets. From the number of people killed in the US by police[12] to the fates of people returned to their countries of origin after being denied asylum in the US,[13] data and computation provide journalists with tools that reveal facts about which official records are weak or nonexistent.

In another kind of gap, computational sophistication is uneven across publications. Some outlets struggle to produce a simple spreadsheet analysis, while large organizations might have computing and development resources located inside the newsroom or available through an internal R&D lab. Organizations such as Quartz’s AI Studio[14] share their resources with other news organizations, providing machine learning assistance to reporters. Journalism educators have slowly begun to include new data and computing tools in their regular curricula and in professional development. Many of the computational journalism projects we discuss in this article are also collaborations among multiple newsrooms, while others are collaborations with university researchers.

The Two Cultures

Querying data sources has become an everyday practice in most newsrooms. One recent survey found that almost half of polled reporters used data every week in their work.[15] Reporters are experimenting with increasingly advanced machine learning or AI techniques, and, in analyzing these data, also encounter different interpretations of machine learning and AI. As statistician Leo Breiman wrote in 2001, two “cultures” have grown up around the questions of how data inform models and how models inform narrative.[16]

Breiman highlights the differences between these two groups using a classical “learning” or modeling problem, in which we have data consisting of “input variables” that, through some unknown mechanism, are associated with a set of “response variables.”

As an example, political scientists might want to judge Democratic voters’ preferences for candidates in the primary (a response) based on a voter’s demographics, their views on current political topics like immigration, and characteristics of the county where they live (inputs). The New York Times recently turned to this framework when trying to assess whether the Nike Vaporfly running shoe gave runners an edge in races (again, a response) based on the weather conditions for the race, the gender and age of the runner, and their training regime and previous performances in races (inputs).[17] Finally, later in the article we will look at so-called “predictive policing,” which attempts to predict where crime is likely to occur in a city (response) based on historical crime data, as well as aspects of the city itself, like the location of bars or subway entrances (inputs).

Given these examples, in one of Breiman’s modeling cultures, the classically statistical approach, models are introduced to reflect how nature associates inputs and responses. Statisticians rely on models for hints about important variables influencing phenomena, as well as the form that influence takes, from simply reading the coefficients of a regression table, to applying post-processing tools like LIME (Local Interpretable Model-Agnostic Explanations)[18] to describe the dependence of more complex machine learning or AI models on the values of specific variables. In this culture, models are rich with narrative potential, reflecting something of how the system under study operates, and the relationship between inputs and outputs offers new opportunities for reporting and finding leads.
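
To make "reading the coefficients" concrete, here is a minimal sketch of fitting a one-input linear model and interpreting its slope. The data are synthetic and the variable names (race-day temperature, finish time) are hypothetical, loosely echoing the running example above; this is not the model the Times used.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Synthetic data: temperature in degrees (input), finish time in minutes (response).
temperature = [5, 10, 15, 20, 25]
finish_time = [180, 182, 184, 186, 188]

intercept, slope = fit_line(temperature, finish_time)

# The slope is the "story" in this culture: the change in the response
# associated with a one-unit change in the input.
print(f"Each extra degree is associated with {slope:.2f} more minutes.")
```

With several inputs the same reading applies to each coefficient in turn, holding the other inputs fixed, which is why a regression table can serve as a source of leads.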

The second modeling approach deals more with predictive accuracy: the performance of the model is important, not the specifics of how each input affects the output. In this culture, a model does not have to bear any resemblance to nature as long as its predictions are reliable. This has given rise to various algorithmic approaches in which the inner workings of a model are not immediately evident or even important. Instead, the output of a model, a prediction, carries the story. Journalists using these models focus on outcomes, perhaps to help skim through a large data set for “interesting” cases. We can judge the ultimate “fairness” of a model, for example, by examining the predicted outcomes for various collections of inputs. Since Breiman’s original paper in 2001, the lines between these two cultures have begun eroding as journalists and others call for explainability of algorithmic systems.
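
One simple way to examine predicted outcomes for various collections of inputs is to compare how often a model flags cases in different groups. The sketch below assumes a hypothetical stand-in `predict` function and invented records; it illustrates auditing outputs without opening the model, and is not a complete test of fairness.

```python
from collections import defaultdict

def predict(record):
    """Stand-in for an opaque model: flags a record when its score reaches 0.5.
    Hypothetical; a real audit would query the actual system under study."""
    return record["score"] >= 0.5

# Invented records, each with a group label and the input the stand-in model uses.
records = [
    {"group": "north", "score": 0.7},
    {"group": "north", "score": 0.6},
    {"group": "north", "score": 0.2},
    {"group": "north", "score": 0.9},
    {"group": "south", "score": 0.4},
    {"group": "south", "score": 0.8},
    {"group": "south", "score": 0.1},
    {"group": "south", "score": 0.3},
]

def flag_rates(records, predict):
    """Return the share of records flagged by the model within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [flagged, total]
    for record in records:
        counts[record["group"]][1] += 1
        if predict(record):
            counts[record["group"]][0] += 1
    return {group: flagged / total for group, (flagged, total) in counts.items()}

rates = flag_rates(records, predict)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.0%} of cases flagged")
```

A gap between groups is a lead rather than a finding: it says where to look, and reporting still has to establish why the gap exists.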

Classical Learning Problems and Narratives